Postmortem: 2019-03-29 DNS-related Cosmos Hub Validator Incident

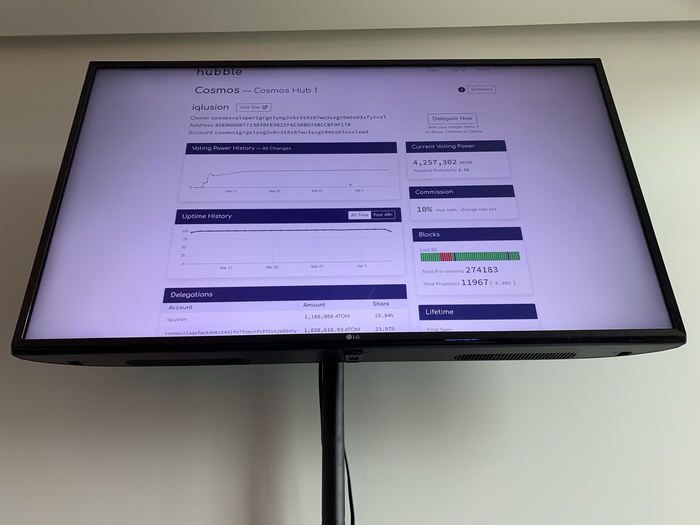

It began with a series of PagerDuty alerts on our phones. We occasionally have false positives, but this was different: several alarms in a row. We looked up at the display in our NOC (above photo, although from a different day) to see that this was not a false alarm: Hubble was also showing we were down. At least our alerts were working!

Though we had been making several changes throughout the day to move onto a new internal sentry architecture, almost all of these changes were additive, so it was surprising to see we had an outage. After hopping onto our redundant validator hosts and checking on the process status, we saw something rather scary: both the active validator and backup validator processes had crashed!

A quick examination of the stack trace found the cause: DNS. We had just deleted a DNS record we thought was no longer in use, which seemed like a likely culprit. So first things first: we rolled back the DNS change, restoring the record. But something else was still a bit odd: after suffering some DNS-related outages on previous testnets, we had removed all uses of DNS in the validator configuration, so how could a missing DNS record cause our validator to crash?

After the cached NXDOMAIN response expired and the DNS record started resolving again, our validator nodes came back up. We were signing again, but what was the root cause?
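Restoring a record does not help until the cached negative answer expires upstream, so a restart is only useful once the name resolves again. As a rough illustration (not the procedure we followed during the incident), the sketch below polls a name until it resolves. `waitForRecord`, `flakyLookup`, and the hostname are hypothetical names invented for this example; in real use the resolver argument would be something like `net.LookupHost`.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// lookupFunc has the same shape as net.LookupHost, so a fake resolver can be
// swapped in for demonstration.
type lookupFunc func(host string) ([]string, error)

// flakyLookup (hypothetical) returns a fake resolver that fails its first
// `failures` calls, imitating a cached negative answer that eventually expires.
func flakyLookup(failures int) lookupFunc {
	calls := 0
	return func(host string) ([]string, error) {
		calls++
		if calls <= failures {
			return nil, &net.DNSError{Err: "no such host", Name: host, IsNotFound: true}
		}
		return []string{"192.0.2.10"}, nil
	}
}

// waitForRecord (hypothetical) polls lookup until host resolves or the
// attempts run out. It returns the number of the attempt that succeeded.
func waitForRecord(host string, lookup lookupFunc, attempts int, interval time.Duration) (int, error) {
	var lastErr error
	for i := 1; i <= attempts; i++ {
		addrs, err := lookup(host)
		if err == nil && len(addrs) > 0 {
			return i, nil
		}
		if err == nil {
			err = fmt.Errorf("no addresses returned")
		}
		lastErr = err
		if i < attempts {
			time.Sleep(interval)
		}
	}
	return 0, fmt.Errorf("%q still not resolving after %d attempts: %v", host, attempts, lastErr)
}

func main() {
	// The fake resolver fails twice, then succeeds.
	n, err := waitForRecord("sentry.example.com", flakyLookup(2), 5, 10*time.Millisecond)
	if err != nil {
		fmt.Println("do not restart yet:", err)
		return
	}
	fmt.Printf("record resolved on attempt %d; safe to restart\n", n)
}
```

Injecting the resolver keeps the polling logic testable without touching real DNS.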

We found the source of the DNS record: one of the sentries our validators were connecting to was advertising it as its external_laddr. The offending code in the stack trace was showing a crash inside of the PEX reactor’s address book code. This made it particularly scary as it appeared this failure mode could be remotely exploited as part of a denial-of-service attack.
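To make the failure mode concrete: a peer-advertised address is untrusted input, so a failed DNS lookup on it is safer handled as an ordinary error than allowed to take the process down. The sketch below is not Tendermint's code or its eventual fix. `resolvePeerAddr`, `failingLookup`, and the example hostname are hypothetical, and it only illustrates the general idea of rejecting an unresolvable peer address instead of crashing.

```go
package main

import (
	"fmt"
	"net"
)

// lookupFunc has the same shape as net.LookupHost, so a fake resolver can be
// swapped in for demonstration.
type lookupFunc func(host string) ([]string, error)

// failingLookup (hypothetical) stands in for a resolver answering for a
// deleted DNS record.
func failingLookup(host string) ([]string, error) {
	return nil, &net.DNSError{Err: "no such host", Name: host, IsNotFound: true}
}

// resolvePeerAddr (hypothetical) resolves a peer-advertised "host:port"
// string and reports any failure as an error rather than panicking.
func resolvePeerAddr(addr string, lookup lookupFunc) (net.IP, string, error) {
	host, port, err := net.SplitHostPort(addr)
	if err != nil {
		return nil, "", fmt.Errorf("malformed peer address %q: %w", addr, err)
	}
	// IP literals need no DNS lookup at all.
	if ip := net.ParseIP(host); ip != nil {
		return ip, port, nil
	}
	addrs, err := lookup(host)
	if err != nil {
		return nil, "", fmt.Errorf("cannot resolve peer host %q: %w", host, err)
	}
	if len(addrs) == 0 {
		return nil, "", fmt.Errorf("peer host %q resolved to no addresses", host)
	}
	ip := net.ParseIP(addrs[0])
	if ip == nil {
		return nil, "", fmt.Errorf("resolver returned invalid IP %q for %q", addrs[0], host)
	}
	return ip, port, nil
}

func main() {
	// An unresolvable advertised address is skipped, not fatal.
	if _, _, err := resolvePeerAddr("sentry.example.com:26656", failingLookup); err != nil {
		fmt.Println("skipping peer:", err)
	}
	// An IP literal never touches the resolver.
	ip, port, _ := resolvePeerAddr("10.0.0.1:26656", failingLookup)
	fmt.Println("usable peer:", ip, port)
}
```

With this shape, the caller decides what to do with a bad address (log it, drop the peer, retry later), and a missing record cannot by itself bring down the node.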

For that reason we responsibly disclosed the vulnerability upstream, and kept our lips sealed until the fix was released in cosmos-sdk v0.33.2. You can read more about it on the tendermint/tendermint#3522 GitHub issue.

We’ve completed maintenance to update all of our Cosmos Hub nodes, and should now be fully patched against this particular vulnerability. We apologize for taking so long to write this postmortem, but we did not want to disclose information about a security-related issue until we had received the all clear from Tendermint to do so.

Incident timeline

All events occurred on 2019-03-29. All times are UTC.

- 02:39: Validator node `gaiad` processes receive `SIGSEGV` due to DNS resolution failure
- 02:42: Initial alerts informing us about the outage. Hubble confirms we are down.
- 02:44: Discovery of the DNS-related `gaiad` crash
- 02:47: Deleted DNS record identified as probable root cause and rolled back to previous configuration
- 02:50: Local DNS caches purged but upstream DNS server still responding with cached `NXDOMAIN`
- 02:56: Validator nodes manually restarted after `NXDOMAIN` clears
- 02:58: Normal operation resumes
- 03:00: Hubble confirms we are signing again